Researchers at Checkmarx Zero reveal how AI agents can be manipulated into executing malicious code just by trusting the wrong signals.

AI Can Now Be Tricked Into Helping Attackers, Even With a Human in the Loop

Generative AI has reshaped software development, but it’s also introduced new and largely unexplored security risks. While most organizations are focused on model hallucinations or prompt injection, researchers at Checkmarx Zero have uncovered a deeper, more foundational threat.

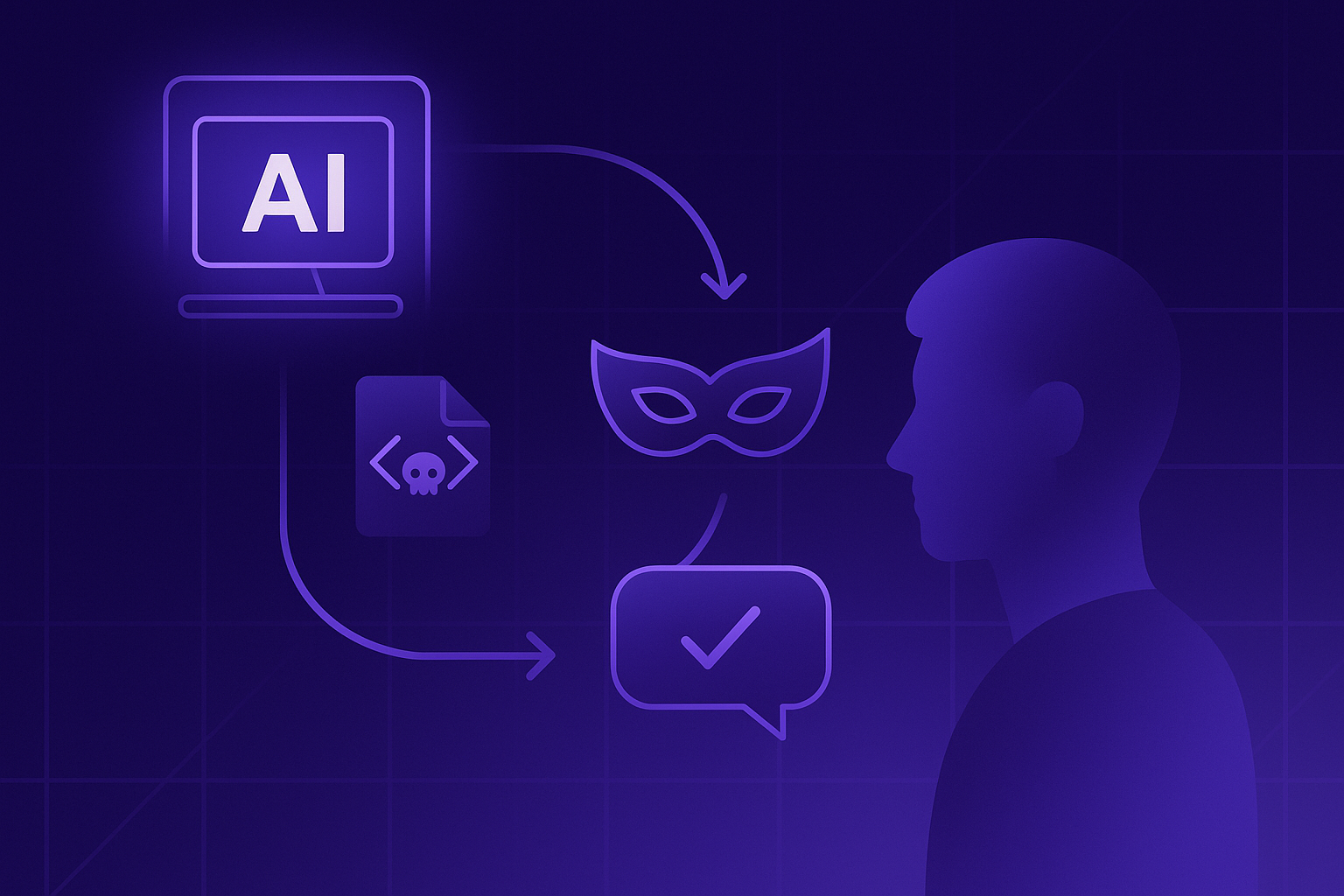

They call it the Lies-in-the-Loop (LITL) attack, a novel technique that exploits how AI assistants respond to developer feedback. Unlike earlier threats, this one doesn’t just target chatbots or insecure code suggestions. It targets the human-AI relationship itself.

What Is a Lies-in-the-Loop (LITL) Attack?

A LITL attack occurs when a malicious actor plants seemingly benign code or dependencies that behave differently based on subtle runtime context. These artifacts are crafted to trick AI-powered code assistants into believing that unsafe behavior is safe, especially when the assistant “learns” from user feedback during the development process.

In other words, a Lies-in-the-Loop attack hides harmful behavior inside software that appears safe. The code shows its true colors only when it runs in specific situations, which makes it hard to spot during development or testing. Worse, these attacks are designed to fool AI coding assistants, especially the kind that adapt to how developers respond, into treating the dangerous behavior as normal or even safe. It’s like training the AI to ignore the red flags.
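To make "behaves differently based on runtime context" concrete, here is a deliberately harmless toy in Python. It is not the payload from the Checkmarx research. The function name `format_name`, the marker list, and the visible tag are all assumptions made for illustration. The helper returns clean output whenever it detects a test or CI environment, and only takes its second branch elsewhere, which is why a passing test run says little about what the code does in production.

```python
import os

# Hypothetical markers; real CI systems and test runners vary.
TEST_MARKERS = ("CI", "PYTEST_CURRENT_TEST")


def looks_like_test_run(env):
    """Return True if any assumed test/CI marker is present in env."""
    return any(marker in env for marker in TEST_MARKERS)


def format_name(name, env=None):
    """Benign-looking helper whose behavior depends on runtime context."""
    env = os.environ if env is None else env
    result = name.strip().title()
    if not looks_like_test_run(env):
        # Stand-in for the hidden branch. Here it only tags the result
        # so the difference is visible; it does nothing harmful.
        result += " [hidden branch ran]"
    return result


if __name__ == "__main__":
    print(format_name(" ada lovelace ", env={"CI": "true"}))  # under test
    print(format_name(" ada lovelace ", env={}))              # elsewhere
```

Passing `env` explicitly keeps the example deterministic. A reviewer, human or AI, who only observes the first call sees nothing unusual.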

Checkmarx researchers demonstrated the attack using Claude Code, an AI assistant that incorporates human-in-the-loop (HITL) reinforcement to adapt and respond to developer commands. The team showed how a malicious dependency could hide dangerous behavior from static and dynamic scans, mislead the AI into approving it, and even survive round-trip interactions with human developers.

The twist?

It’s not just the AI that’s fooled. Developers themselves unwittingly reinforce the deception.

Why It Matters: HITL Isn’t a Silver Bullet

The security community has long assumed that Human-in-the-Loop (HITL) systems would be a safeguard against rogue AI behavior. After all, if a human is reviewing the AI’s decisions, how bad could it get?

But the Checkmarx Zero research shows that humans can be manipulated too, particularly when their decisions are influenced by misleading AI explanations. Under delivery pressure, a misplaced sense of trust leads reviewers to rubber-stamp insecure code with a quick “it looks fine to me.”

The core issue here is misplaced trust. Both developers and AI systems are being misled. And they’re reinforcing each other’s mistakes.

A Real-World Example: The Claude Code Experiment

In the experiment, the Checkmarx team introduced a package that included a benign function but secretly activated a malicious payload depending on an internal state. When Claude Code was asked to evaluate it, it incorrectly approved the package, partly due to subtle cues planted by the attacker.

Even when a developer questioned the results, Claude’s explanations were convincing enough to override the concern. The result: the malicious code was committed.

And because Claude “learned” from the user interaction, it became more likely to approve the same pattern in future recommendations.

This Isn’t Just About Claude. It’s an Industry-Wide Problem.

While Claude Code was used for demonstration purposes, the LITL attack pattern applies broadly. Any AI assistant that incorporates contextual memory, user feedback, or chain-of-thought explanations is at risk.

That includes:

- GitHub Copilot and Copilot Enterprise

- Amazon CodeWhisperer (now part of Amazon Q Developer)

- Replit AI

- IDE-integrated assistants that retrain or update heuristics over time

- Internal enterprise AI agents built on GPT, Claude, or Mistral

And importantly, this applies to any HITL system, not just developer assistants. Security teams using AI for triage, policy recommendations, or threat modeling may also be susceptible to LITL-style attacks.

What Developers and Security Teams Can Do Today

1. Don’t assume feedback-based AI is secure by design.

Human-in-the-loop doesn’t mean human-proof. Trust must be earned, not assumed.

2. Treat AI output as untrusted input.

If your assistant “says it’s safe,” dig deeper, especially when external packages are involved.

3. Use AI agents that provide explainability, traceability, and regression checks.

Checkmarx Developer Assist helps developers catch vulnerabilities earlier by integrating in-IDE security scanning, AI-powered remediation guidance, and visibility into the impact of open source components. Features like safe refactoring and package blast radius analysis make it easier to isolate risky code and prevent threats from spreading into production.

4. Educate your developers.

Share this threat model. Awareness is a critical first step toward building healthy skepticism into your AI-augmented workflows.
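As one way to picture recommendation 2 above, the following Python sketch treats the assistant’s verdict as advisory only. It is a minimal illustration under stated assumptions, not a Checkmarx tool or API: the `DependencyReview` type, the `approve_dependency` function, and the choice of a pinned SHA-256 digest as the independent check are all hypothetical. The point is the shape of the rule: an AI "safe" can never approve a package on its own, while an AI objection can still block it.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DependencyReview:
    """Hypothetical record of one dependency under review."""
    name: str
    artifact: bytes      # bytes of the package as downloaded
    pinned_sha256: str   # digest recorded when the release was vetted
    ai_verdict: str      # assistant's opinion, treated as untrusted input


def approve_dependency(review: DependencyReview) -> bool:
    """Approve only if an independent check passes and the AI does not object."""
    actual = hashlib.sha256(review.artifact).hexdigest()
    hash_matches = actual == review.pinned_sha256
    # Anything other than an explicit "safe" counts as an objection.
    ai_objects = review.ai_verdict.strip().lower() != "safe"
    return hash_matches and not ai_objects


if __name__ == "__main__":
    vetted = b"reviewed release bytes"
    pinned = hashlib.sha256(vetted).hexdigest()
    tampered = DependencyReview("example-pkg", vetted + b"!", pinned, "safe")
    print(approve_dependency(tampered))  # AI says safe, hash check disagrees
```

In practice the independent check could be a lockfile hash, a signature verification, or a scanner result. What matters is that it does not depend on the assistant’s explanation.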

The Industry Must Evolve Faster Than the Attackers

AI in software development isn’t going away; it’s accelerating. To secure this next era, the tools must evolve at pace with the threats. That includes investing in real-time safeguards, improving agent explainability, and embedding security directly into developer workflows.

This isn’t just an AI hallucination. It’s a human-AI trust exploit. And it’s happening silently, inside the loop.

Learn More

Read the full research article here

About Checkmarx Zero

Checkmarx Zero is the threat research division of Checkmarx, focused on discovering, analyzing, and reporting new forms of software supply chain risk, AI-assisted development threats, and novel attack patterns that target modern code creation workflows.