The person who writes the code shouldn’t be the one who signs off on its security

As GenAI tools revolutionize how code is written, engineering leaders face a new wave of questions.

- Is AI-generated code safe?

- Who’s reviewing what GenAI suggests?

- Can the tool that generated the code be trusted to validate it?

According to a 2025 IDC report, “vibe coding,” the pattern of developers leaning heavily on AI assistants, is driving teams to accept code with limited scrutiny and to prioritize speed over validation. While GenAI undeniably boosts developer productivity, this shift introduces real security risks. As IDC’s Katie Norton puts it, “Developers assemble or accept code with limited scrutiny.”

It’s not just a theory; it’s happening at scale.

| Model | Correct & Secure | Correct Only | % Insecure of Correct |
| --- | --- | --- | --- |
| OpenAI g3 | 47.8% | 51.6% | 26.7% |
| Claude 3 Sonnet | 40.7% | 52.1% | 24.1% |
| GPT-4.1 | 41.1% | 55.1% | 25.6% |
| Gemini 1.5 Pro | 33.8% | 60.2% | 21.4% |

Source: BaxBench (https://baxbench.com/), June 2025

The Rise of “Vibe Coding,” and Why It’s a Problem

IDC’s 2024 Generative AI Developer Study reports that developers using GenAI tools achieve a 35% productivity boost, often matching the output of 3–5 engineers. However, many of those AI-assisted outputs land in the repository unchecked: some insecure, some noncompliant, and some outright dangerous.

This risk is also validated by independent security benchmarks. In tests across multiple large language model coding assistants, up to 70% of AI-generated code was found to be insecure or flawed when evaluated against secure coding baselines (source: BaxBench, June 2025). At the same time, enterprise adoption is accelerating, with analysts projecting that 90% of enterprise engineers will use GenAI tools by 2028. Code volume is exploding, but validation isn’t keeping pace.

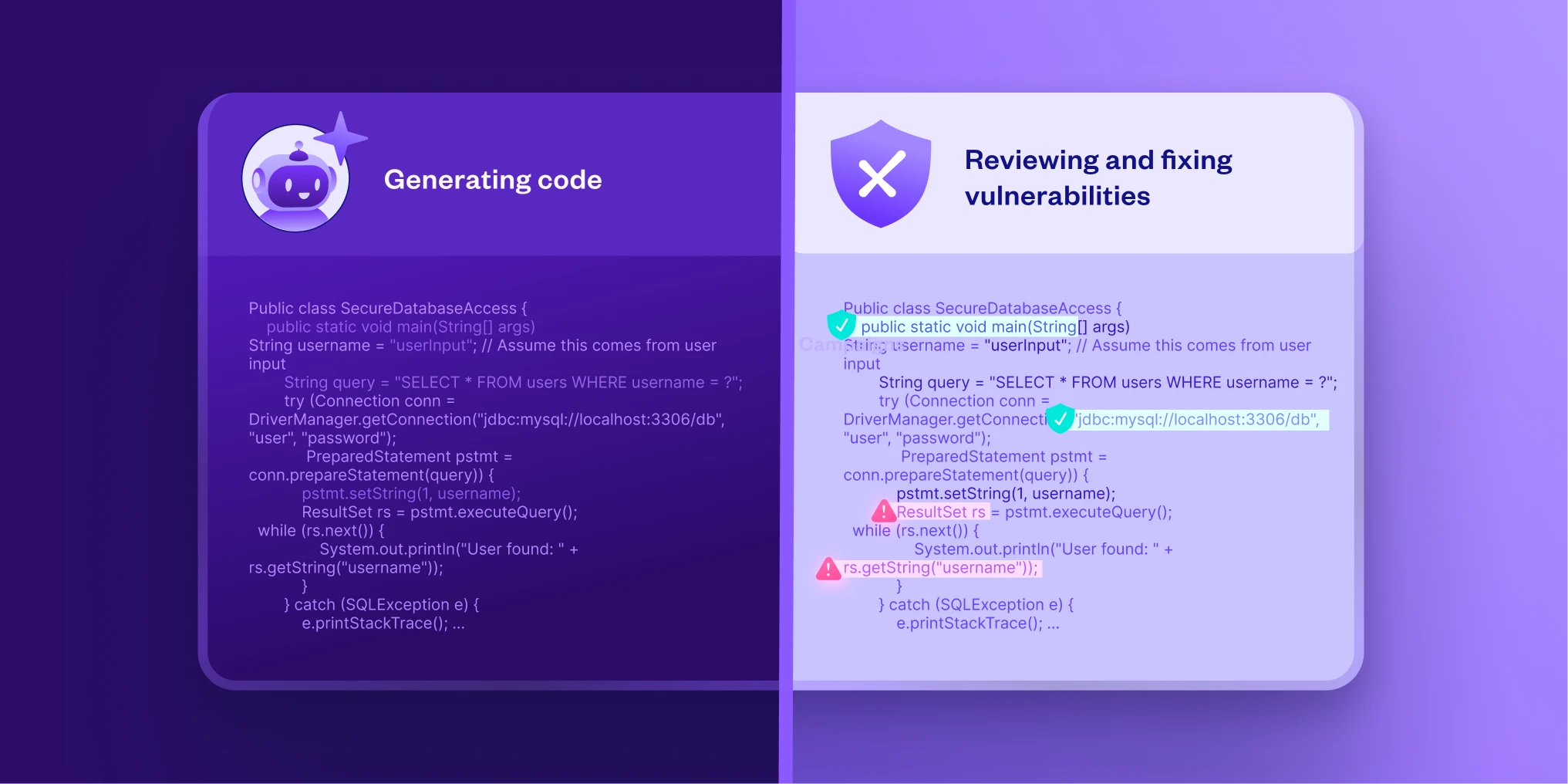

Code Generators Aren’t Built to be Code Reviewers

Tools that generate code excel at accelerating development. They scaffold boilerplate, offer real-time completions, and help developers explore frameworks. But they weren’t built to perform secure code validation.

They don’t:

- Enforce your AppSec policies

- Validate against known CVEs or malicious dependencies

- Assess license compliance

- Create audit trails or enforce remediation workflows

- Block risky commits based on code context

Even GitHub makes it clear: Copilot suggests code but doesn’t secure it. Most GenAI tools optimize for speed, not secure validation, policy enforcement, or risk oversight. Even when layered with basic guardrails, they can’t guarantee protection at the scale or specificity required in real-world CI/CD environments.

AI code generation is creative; secure software demands consistency, not improvisation. The recent IDC report puts it clearly: “As AI becomes part of the development process itself, organizations must adapt their security practices to keep pace with faster and less predictable workflows.” Security can’t be tacked on as an afterthought or handled by the same tool that wrote the code in the first place.
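
To make the idea of an independent gate concrete, here is a minimal Python sketch. It is illustrative only and is not Checkmarx's implementation or API: the `Finding` and `Change` types, the tool names, and the severity threshold are all hypothetical. It blocks a change when the tool that wrote the code is also the tool that reviewed it, or when any finding exceeds the allowed severity.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real policies will define their own.
SEVERITY_ORDER = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Finding:
    rule_id: str   # hypothetical rule name, e.g. "hardcoded-secret"
    severity: str  # one of the SEVERITY_ORDER keys
    file: str


@dataclass(frozen=True)
class Change:
    author_tool: str    # tool that generated the code
    reviewer_tool: str  # tool that validated the code
    findings: tuple = ()


def gate(change, max_allowed="medium"):
    """Return (allowed, reasons). The change is blocked if any reason exists."""
    reasons = []
    # Separation of duties: the generator must not sign off on its own output.
    if change.author_tool == change.reviewer_tool:
        reasons.append("author and reviewer are the same tool")
    # Policy threshold: block anything above the allowed severity.
    threshold = SEVERITY_ORDER[max_allowed]
    for finding in change.findings:
        if SEVERITY_ORDER[finding.severity] > threshold:
            reasons.append(
                f"{finding.rule_id} ({finding.severity}) in {finding.file}"
            )
    return (not reasons, reasons)


if __name__ == "__main__":
    change = Change(
        author_tool="genai-assistant",
        reviewer_tool="independent-scanner",
        findings=(Finding("hardcoded-secret", "critical", "app/config.py"),),
    )
    allowed, reasons = gate(change)
    print("allowed" if allowed else "blocked", reasons)
```

The point of the sketch is the design choice, not the details: the decision is deterministic and policy-driven, and it is made by something other than the code's author.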

Checkmarx Assist: Agentic AI That Acts, Not Just Suggests

If you want your AppSec to be autonomous and proactive, this is where Checkmarx One Assist comes in. Unlike generative tools that suggest code, Checkmarx Assist is agentic AI built on the Checkmarx One platform, designed to evaluate, enforce, and remediate based on trusted security intelligence and organizational policy.

With Checkmarx Assist, you have:

- Vulnerability detection directly in the IDE (even before code is committed)

- Fix suggestions enriched with context and guided explanations

- Auto-generated, compliant pull requests

- Security actions aligned to policy, with audit trails and platform-wide oversight

And it’s not just one agent; it’s a family of agents:

- Developer Assist Agent: Works in the IDE to secure code pre-commit

- Policy Assist Agent: Applies AppSec rules and gates across the CI/CD pipeline

- Insights Assist Agent: Surfaces metrics like MTTR, risk posture, and fix rates

These capabilities are built on top of Checkmarx’s proven AppSec engines (SAST, SCA, IaC, and Secrets) and backed by a threat intelligence network that monitors over 400,000 known malicious packages.

Separation of Duties: Still Non-Negotiable in AppSec

You wouldn’t let a developer merge their own PR without a second pair of eyes. You wouldn’t let your accounting team audit their own numbers. If GenAI is the author, it shouldn’t be the reviewer.

Checkmarx Assist gives your team the security partner it needs:

- A second set of eyes

- Independent risk detection

- Policy-enforced action

- Full coverage across the SDLC

Organizations using Checkmarx Assist report fewer vulnerabilities, higher remediation rates, and improved DORA metrics, such as lead time and change failure rate, without slowing delivery velocity.

Checkmarx One Assist not only remediates security issues like malicious packages and secrets in real time, it also suggests surrounding code fixes to resolve any breaking changes caused by the remediation.

What the Data Tells Us: Real ROI from Risk Reduction

Security is measurable, and the numbers speak volumes.

When teams rely solely on GenAI code assistants, they may accelerate output but miss critical context, governance, and enforcement. The results can be costly. From rework and regression to unpatched vulnerabilities and license violations, the downstream risks add up fast.

That’s why Checkmarx Assist was benchmarked not only for its security precision, but for its impact on real-world development and remediation economics.

Vulnerability Remediation

Engineering teams that rely only on GenAI tools often need to manually review and correct insecure suggestions, which increases time spent and risk exposure. Developer time spent remediating vulnerabilities costs around $375 per developer per week on average, and without context-aware validation, 1 in 4 fixes still introduces a security flaw.

With Checkmarx Assist layered in, remediation becomes:

- Faster, thanks to pre-commit detection in the IDE

- More accurate, with secure-by-default code fixes aligned to policy

- Less risky, dropping flaw rates from 25% to 5%

This translates into a risk-adjusted weekly savings of over $200 per developer, while materially improving your mean-time-to-remediate (MTTR).

| Scenario | Weekly Cost (Time) | Security Flaw Risk per Fix | Risk-Adjusted Weekly Cost |
| --- | --- | --- | --- |
| Copilot Only | $375 | 25% | $506.25 |
| Copilot + Checkmarx Assist | $270 | 5% | $297.00 |
| Risk-Adjusted Cost | $1,012 | $506 | $297 |
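
As a quick check on the savings figure, the short Python sketch below works only from the risk-adjusted weekly costs in the table ($506.25 for Copilot only, $297.00 with Checkmarx Assist). The team size of 50 developers is a hypothetical value added purely for illustration.

```python
def savings(baseline, improved):
    """Return (absolute savings, percentage reduction) between two weekly costs."""
    absolute = baseline - improved
    percent = absolute / baseline * 100
    return absolute, percent


# Risk-adjusted weekly cost per developer, taken from the table above.
COPILOT_ONLY = 506.25
COPILOT_PLUS_ASSIST = 297.00

weekly_savings, reduction_pct = savings(COPILOT_ONLY, COPILOT_PLUS_ASSIST)

# Hypothetical team size, for illustration only.
TEAM_SIZE = 50
team_weekly_savings = weekly_savings * TEAM_SIZE

if __name__ == "__main__":
    print(f"Weekly savings per developer: ${weekly_savings:.2f}")
    print(f"Reduction in risk-adjusted cost: {reduction_pct:.1f}%")
    print(f"Weekly savings for {TEAM_SIZE} developers: ${team_weekly_savings:,.2f}")
```

The per-developer result is $209.25 per week, which is the basis for the "over $200" figure, and it corresponds to a reduction of roughly 41% in risk-adjusted remediation cost.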

Open Source Package Validation

Open source is foundational – but risky when mismanaged. License compliance violations, known-vulnerable packages, and malicious dependencies are easy to miss when code is accepted without inspection.

Teams using GenAI alone spend more than an hour per evaluation, often still missing critical red flags. The cost: up to $337.50 per developer, per week in risk-adjusted impact.

| Scenario | Without AI | GenAI Only | GenAI + Checkmarx Assist |
| --- | --- | --- | --- |
| Time per evaluation | 1.5 hrs | 1.25 hrs | 0.5 hrs |
| License risk | 15% | 15% | 5% |
| Malicious package risk | 20% | 20% | 5% |
| Risk-adjusted weekly cost | $405 | $337.50 | $110.00 |
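
The article does not state how the risk-adjusted figures were calculated. The Python sketch below shows one model that happens to reproduce the table, under two assumptions that are not in the source: a loaded developer rate of $200 per hour and one package evaluation per developer per week. Under that model, the cost is the time cost scaled up by the sum of the two risk rates.

```python
# Assumption (not stated in the source): loaded developer cost per hour.
HOURLY_RATE = 200.0


def risk_adjusted_cost(hours, license_risk, malicious_risk, rate=HOURLY_RATE):
    """Time cost of one evaluation, inflated by the combined risk rates."""
    return hours * rate * (1 + license_risk + malicious_risk)


# (hours per evaluation, license risk, malicious package risk) from the table.
SCENARIOS = {
    "Without AI": (1.5, 0.15, 0.20),
    "GenAI Only": (1.25, 0.15, 0.20),
    "GenAI + Checkmarx Assist": (0.5, 0.05, 0.05),
}

# Assumes one evaluation per developer per week.
costs = {
    name: round(risk_adjusted_cost(*inputs), 2)
    for name, inputs in SCENARIOS.items()
}

# Relative reduction when moving from GenAI only to GenAI plus Assist.
reduction = 1 - costs["GenAI + Checkmarx Assist"] / costs["GenAI Only"]

if __name__ == "__main__":
    for name, cost in costs.items():
        print(f"{name}: ${cost:.2f}")
    print(f"Reduction vs. GenAI only: {reduction:.0%}")
```

With these assumptions the model yields $405.00, $337.50, and $110.00, matching the table, and a reduction of about 67% between the GenAI-only and GenAI + Checkmarx Assist scenarios. Changing the hourly rate scales all three costs equally, so the percentage reduction stays the same.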

Close the Gap. Don’t Just Hope for the Best.

If your GenAI tool is the racecar, Checkmarx Assist is the seatbelt, speedometer, and crash test validation, all built in. For open source package validation, the result is a 67% reduction in risk-adjusted cost compared with GenAI alone, along with stronger coverage and less manual overhead. These gains aren’t just productivity-based; they’re risk-adjusted cost reductions that reflect fewer vulnerabilities, faster fixes, and fewer post-deployment fire drills.

Book a demo of Checkmarx Assist today and see how agentic AI gives your AppSec program the visibility, control, and automation it needs to stay ahead. In an era of vibe coding and machine-speed development, your security tooling can’t afford to watch from the sidelines. Empower it to act.

If your developers generate code with AI, consult a Checkmarx expert.